Chromebook to Get AI Upgrade for Free

Google has announced two new devices for its Chromebook lineup, the Samsung Galaxy Chromebook Plus and the Lenovo Chromebook Duet 11-inch, along with a long list of new AI features for both new and existing Chromebooks.

With a 15.6-inch OLED display, 256GB of storage, an Intel Core 3 100U (Raptor Lake-R) processor, and 8GB of RAM, the Samsung Galaxy Chromebook Plus is a powerful laptop. At less than half an inch in thickness and 2.58 pounds in weight, it is both lighter and thinner than earlier models.

First of its kind

Additionally, it is the first Chromebook to come equipped with the Quick Insert key, Google’s take on the Copilot key. Situated between the Tab and Shift keys, it opens a menu of shortcuts to features like Help Me Write, emoji and GIFs, a list of recently accessed webpages, Google Drive search, and tools to perform basic arithmetic or convert units.

The AI Upgrade Aim

The objective is to give users access to Gemini AI, useful links, and files without hopping between windows. Although the physical key is exclusive to the new Samsung Galaxy Chromebook Plus, any Chromebook user can try the feature with the keyboard shortcut Launcher Key + F. Google also says that the Quick Insert menu will be able to create AI images in the future.

The Lenovo Chromebook Duet is a tablet PC featuring a 10.95-inch WUXGA touchscreen display with 400 nits of brightness and an integrated kickstand. For sketching, it features palm rejection technology; however, the USI Pen 2 stylus is offered separately.

It is powered by a MediaTek Kompanio 838 CPU with up to 8GB of RAM and 128GB of storage. It also has a 5MP front-facing camera and an 8MP rear-facing camera. The device seems to be intended for kids, and Google has partnered with Goodnotes to enhance its app for Chromebooks that support styluses.

New AI features for Chromebooks

Along with these new devices, Google is updating Chromebook Plus computers in October with a suite of new artificial intelligence functions. These include Help Me Read, a tool that summarizes content; Live Translate, which adds subtitles translated by Google AI to videos; an AI-powered Recorder app that transcribes audio; and features that improve video calls.

The largest upgrade appears to be Help Me Read. As the name suggests, it can quickly summarize information for you. You can also ask questions about the text, and it will highlight important dates and details from the content. It works with any text on your screen, and all it takes to activate it is a right-click.

Another significant addition is Live Translate, which works on any platform that has captions, such as Twitch and YouTube. At launch, Live Translate is available in over 100 languages.

New Functions Revealed

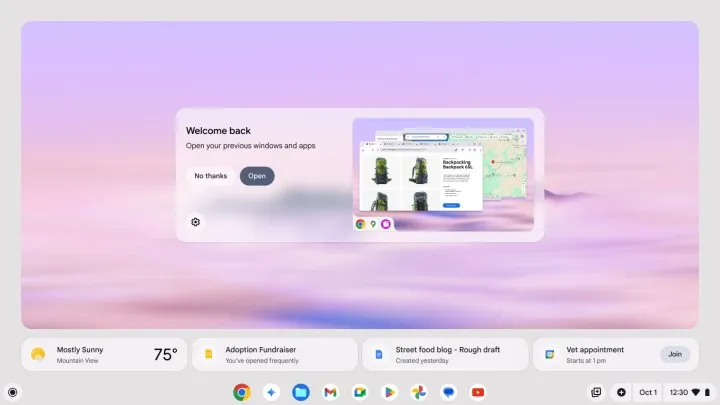

There is also a list of new AI functions for owners of standard Chromebook models: Focus, Pin, Welcome Recap, and Chat with Gemini. Whenever you log in, a pop-up called “Welcome Recap” will provide a summary of your most recent activity on the device. Pin allows you to pin crucial files to your home screen, and Focus will automatically activate Do Not Disturb when you play specific music or after a predetermined amount of time.

The free upgrades for Chromebook and Chromebook Plus devices will roll out throughout October. If you’re just picking up a Chromebook (our list of the best Chromebooks can help), Google is offering three free months of Google One AI Premium, which includes access to Gemini Advanced and two terabytes of Google Drive storage. Additionally, Google is continuing to offer Chromebook Plus buyers a free year of Google One AI Premium.